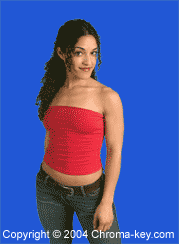

Description

A demonstration of chroma-keying, a technique often used in television shows and newscasts to present a live person in front of a completely different background. The basic idea is to photograph (or film) the person (or object) in front of a single-color background. Usually, bright blue or green backgrounds are used, because these are easy to distinguish from skin and hair colors. Even, consistent lighting of the background is essential, so that the later processing steps can reliably detect the background color.

The algorithm works as follows. The input image is compared with an image of the background (bluescreen) color, and a black-and-white mask image is constructed from the result. The positive mask is white for all bluescreen pixels, while the inverted (negative) mask is white for all 'person' pixels. An AND operation of the positive mask with the background image keeps the background pixels wherever the input showed bluescreen, while the corresponding AND operation of the negative mask with the original input image keeps only the 'person' pixels. Click on the corresponding image viewer components in the schematics to view these intermediate images.

A final OR operation then merges the two intermediate images into a single combined output image. The following three images show the original input image (girl in front of bluescreen, image provided courtesy of chroma-key), the background image, and the resulting combined output image:
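The mask, AND, and OR steps described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the applet's actual implementation: it assumes that images are lists of rows of 24-bit `0xRRGGBB` integers, and the names `chroma_key`, `WHITE`, `BLACK`, and the sample pixel values are hypothetical.

```python
WHITE = 0xFFFFFF  # mask value "white": all bits set, AND keeps the pixel
BLACK = 0x000000  # mask value "black": no bits set, AND clears the pixel


def chroma_key(foreground, background, key_color):
    """Composite two equally sized images (lists of rows of 0xRRGGBB ints).

    Assumption: a pixel counts as bluescreen only if it equals key_color exactly.
    """
    output = []
    for fg_row, bg_row in zip(foreground, background):
        out_row = []
        for fg, bg in zip(fg_row, bg_row):
            positive = WHITE if fg == key_color else BLACK  # white on bluescreen
            negative = positive ^ WHITE                     # inverted mask
            from_background = positive & bg   # background where bluescreen was
            from_person = negative & fg       # person pixels everywhere else
            out_row.append(from_background | from_person)   # final OR merge
        output.append(out_row)
    return output


# Tiny 2x2 example with made-up colors.
BLUE = 0x0000FF
SKIN = 0xE0AC69
person = [[BLUE, SKIN],
          [SKIN, BLUE]]
scenery = [[0x102030, 0x405060],
           [0x708090, 0xA0B0C0]]
result = chroma_key(person, scenery, BLUE)
```

Because each mask pixel is either all ones or all zeros, the two AND results never overlap, so the OR simply selects one source per pixel.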

As you can see, the algorithm as implemented here depends critically on perfectly uniform lighting of the background, because the test for background-or-not is made on the exact pixel value. Even a very small difference will cause the equality test to fail, and the corresponding pixels will be taken from the bluescreen input instead of the background image.
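A small example makes this sensitivity concrete. The sketch below assumes 24-bit `0xRRGGBB` pixel values; the function `is_background` and the sample colors are hypothetical and only illustrate the exact-equality test.

```python
KEY_BLUE = 0x0000FF  # assumed bluescreen reference color


def is_background(pixel, key_color=KEY_BLUE):
    """Exact-match test: True only if the pixel equals the key color bit for bit."""
    return pixel == key_color


evenly_lit = 0x0000FF       # matches the reference color exactly
slightly_darker = 0x0000FE  # blue channel off by a single step

exact_hit = is_background(evenly_lit)
near_miss = is_background(slightly_darker)
print(exact_hit, near_miss)
```

The second pixel is visually indistinguishable from the first, yet it fails the test and would therefore show up as bluescreen in the output.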

See the next applet for an improved algorithm that uses a range-based comparison and a smoothed-out transition between foreground and background.
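The details of that improved algorithm are not given here, so the following is only one possible way to realize a range-based comparison with a smooth transition, not a description of the next applet. It assumes `0xRRGGBB` pixels, a Euclidean color distance, and a linear ramp between two thresholds; the names `channels`, `background_weight`, `blend`, and the threshold values `inner` and `outer` are all hypothetical.

```python
def channels(pixel):
    """Split a 0xRRGGBB integer into its (red, green, blue) components."""
    return (pixel >> 16) & 0xFF, (pixel >> 8) & 0xFF, pixel & 0xFF


def background_weight(pixel, key_color, inner=40.0, outer=120.0):
    """Return 1.0 for clear bluescreen, 0.0 for clear foreground, a ramp between.

    Assumption: distance is measured as Euclidean distance in RGB space.
    """
    distance = sum((a - b) ** 2
                   for a, b in zip(channels(pixel), channels(key_color))) ** 0.5
    if distance <= inner:
        return 1.0
    if distance >= outer:
        return 0.0
    return (outer - distance) / (outer - inner)


def blend(fg, bg, key_color):
    """Mix one foreground and one background pixel according to the weight."""
    w = background_weight(fg, key_color)
    r, g, b = (round(w * bc + (1.0 - w) * fc)
               for fc, bc in zip(channels(fg), channels(bg)))
    return (r << 16) | (g << 8) | b


# A slightly darker blue is now still treated as background.
replaced = blend(0x0000FE, 0x102030, 0x0000FF)
```

With thresholds like these, small lighting variations in the bluescreen fall inside the inner range, and pixels near the outline of the person are mixed rather than switched abruptly.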
